What kind of chip will OpenAI make?

OpenAI is reportedly developing custom AI accelerators with Broadcom's help, apparently to reduce its dependence on Nvidia and lower the cost of running its GPT models.

According to sources cited by the Financial Times, Sam Altman's AI hype factory OpenAI is the mystery customer behind the $10 billion order that Broadcom CEO Hock Tan disclosed during Thursday's earnings call.

Although Broadcom does not disclose its customers, it is an open secret that the company's intellectual property forms the basis of most custom cloud silicon.

Tan told analysts on Thursday's call that Broadcom currently serves three XPU customers, with a fourth on the way.

He said: "Last quarter, one prospective customer released production orders to Broadcom, and we have accordingly characterized them as a qualified customer for XPUs. In fact, we have secured over $10 billion of orders for AI racks based on our XPUs. Reflecting this, we now expect the outlook for our fiscal 2026 AI revenue to improve significantly from what we had indicated last quarter."

For some time now, there have been rumors that OpenAI is developing a chip internally to replace Nvidia and AMD GPUs. Sources told the Financial Times that this chip is expected to debut at some point next year, but it will mainly be used internally and will not be open to external customers.

Whether this means the chip will be used for training rather than inference, or simply that it will power OpenAI's own inference and API servers rather than being rented out as accelerator-based virtual machines (as Google and AWS do with their TPU and Trainium accelerators), remains an open question.

Although we don't know how OpenAI plans to use its first-generation silicon, Broadcom's involvement offers some clues as to what it will ultimately look like.

Broadcom has developed many of the foundational technologies needed to build large-scale AI compute systems. These range from the serializers/deserializers (SerDes) used to move data from one chip to another, to the network switches and co-packaged optical interconnects required to scale from a single chip to thousands, to the 3D packaging technologies needed to build multi-chip accelerators. If you're interested, we've explored each of these technologies in detail here.
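To make the SerDes idea concrete, the toy sketch below turns parallel bytes into a serial stream of PAM4 symbols (four amplitude levels, two bits each, Gray-coded so adjacent levels differ by one bit) and back again. It is a simplified illustration under stated assumptions, not a model of any Broadcom design: the function names are hypothetical, and real high-speed SerDes add clock recovery, equalization, line coding and forward error correction, none of which appears here.

```python
# Toy PAM4 serializer/deserializer (illustrative only).
# Assumption: no clocking, equalization, line coding or FEC is modeled;
# real 100G/200G-class SerDes need all of these.

# Gray-coded PAM4: 2 bits per symbol; adjacent amplitude levels differ by
# exactly one bit, so a one-level slicing error corrupts only one bit.
GRAY_PAM4 = {(0, 0): -3, (0, 1): -1, (1, 1): 1, (1, 0): 3}
LEVEL_TO_BITS = {level: bits for bits, level in GRAY_PAM4.items()}


def serialize(data: bytes) -> list[int]:
    """Convert parallel bytes into a serial list of PAM4 amplitude levels."""
    symbols = []
    for byte in data:
        # Walk each byte MSB-first, two bits per symbol.
        for shift in (6, 4, 2, 0):
            pair = ((byte >> (shift + 1)) & 1, (byte >> shift) & 1)
            symbols.append(GRAY_PAM4[pair])
    return symbols


def deserialize(symbols: list[int]) -> bytes:
    """Reassemble a serial list of PAM4 levels into parallel bytes."""
    if len(symbols) % 4:
        raise ValueError("expected 4 PAM4 symbols per byte")
    out = bytearray()
    for i in range(0, len(symbols), 4):
        byte = 0
        for level in symbols[i:i + 4]:
            hi, lo = LEVEL_TO_BITS[level]
            byte = (byte << 2) | (hi << 1) | lo
        out.append(byte)
    return bytes(out)


print(serialize(b"\x1b"))              # [-3, -1, 3, 1]
print(deserialize(serialize(b"XPU")))  # b'XPU'
```

Because each symbol carries two bits, the lane's symbol rate is roughly half its bit rate before coding overhead, which is why PAM4 signalling is common at these speeds.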

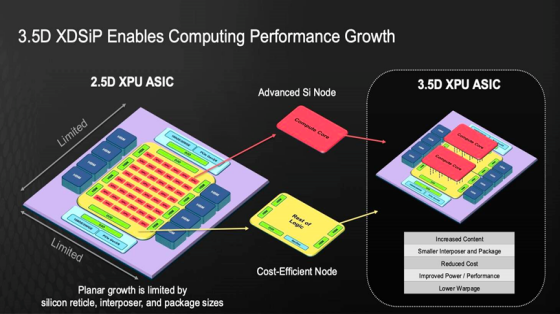

OpenAI could draw on all of these technologies, but Broadcom's 3.5D eXtreme Dimension System in Package technology (3.5D XDSiP) is a likely candidate for the accelerator itself.

Broadcom's largest 3.5D XDSiP designs will support a pair of 3D compute stacks, two I/O dies, and up to 12 HBM stacks on a single 6,000 mm² package. The first products are expected to ship next year, just in time for OpenAI's first chip.
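For a sense of scale, here is a back-of-envelope calculation of what 12 HBM stacks could deliver. Only the stack count comes from the article; the per-stack figures (a 1,024-bit interface at 9.6 Gb/s per pin and 36 GB per stack, roughly HBM3E-class) are assumptions, and whatever memory OpenAI actually pairs with the chip may differ.

```python
# Back-of-envelope memory math for a package with up to 12 HBM stacks.
# Assumptions (NOT from the article): HBM3E-class stacks with a 1024-bit
# interface at 9.6 Gb/s per pin and 36 GB capacity per stack.

HBM_STACKS = 12            # from the article: up to 12 HBM stacks
BUS_WIDTH_BITS = 1024      # assumed per-stack interface width
PIN_RATE_GBPS = 9.6        # assumed per-pin data rate (Gb/s)
STACK_CAPACITY_GB = 36     # assumed per-stack capacity (GB)


def stack_bandwidth_tbps() -> float:
    """Peak bandwidth of one stack in TB/s (bits -> bytes, GB/s -> TB/s)."""
    return BUS_WIDTH_BITS * PIN_RATE_GBPS / 8 / 1000


def package_memory() -> tuple[int, float]:
    """Aggregate (capacity in GB, peak bandwidth in TB/s) across all stacks."""
    return HBM_STACKS * STACK_CAPACITY_GB, HBM_STACKS * stack_bandwidth_tbps()


capacity_gb, bandwidth_tbps = package_memory()
print(f"{capacity_gb} GB, {bandwidth_tbps:.1f} TB/s")  # 432 GB, 14.7 TB/s
```

Under these assumptions a single package would carry 432 GB of HBM with roughly 14.7 TB/s of peak bandwidth; swapping in other per-stack figures only changes the constants.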

Beyond XDSiP, we wouldn't be surprised if OpenAI also taps Broadcom's Tomahawk 6 series switches and co-packaged optics for both scale-up and scale-out networking. We've covered this topic in depth here before. However, because Broadcom has standardized on Ethernet as the protocol of choice for both networking paradigms, OpenAI wouldn't necessarily have to use Broadcom for everything.
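The reason switch bandwidth matters for scale-out is radix: the more ports a switch has, the more accelerators a shallow network can connect. The sketch below applies the standard folded-Clos (leaf/spine and fat-tree) formulas to a 102.4 Tb/s switch, which is Tomahawk 6's published capacity. The assumptions that the full bandwidth is broken out into ports of a single speed and that the fabric is non-blocking are ours, and the function names are hypothetical.

```python
# Endpoint counts for non-blocking folded-Clos Ethernet fabrics.
# Assumptions: identical switches, all bandwidth broken out at one port
# speed, half of each leaf's ports facing hosts. 102.4 Tb/s is
# Tomahawk 6's published switching capacity; the port speeds are
# illustrative breakouts.

SWITCH_GBPS = 102_400


def radix(port_gbps: int, switch_gbps: int = SWITCH_GBPS) -> int:
    """Number of ports when the switch is split evenly at one speed."""
    return switch_gbps // port_gbps


def two_tier_hosts(k: int) -> int:
    """Leaf/spine: k leaves, each with k/2 host-facing ports."""
    return k * k // 2


def three_tier_hosts(k: int) -> int:
    """Classic k-ary fat tree: k pods of (k/2)^2 hosts each."""
    return k ** 3 // 4


for speed in (1600, 800, 200):
    k = radix(speed)
    print(f"{speed}G x {k} ports: 2-tier {two_tier_hosts(k):,}, "
          f"3-tier {three_tier_hosts(k):,} endpoints")
```

Breaking the same switch out into slower, more numerous ports raises the radix, which is why a two-tier network built from 512-port switches could, in principle, reach 131,072 endpoints instead of the 2,048 possible with 64 ports.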

Although Broadcom's 3.5D XDSiP looks like a plausible foundation for OpenAI's first in-house chip, it is not a complete solution in itself. The AI startup still needs to supply, or at least license, a compute architecture with high-performance matrix multiply-accumulate (MAC) units (sometimes called MMEs or tensor cores).
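To illustrate what a MAC-based matrix unit does, the sketch below breaks a large matrix multiplication into fixed-size tiles and repeatedly applies a tile-level multiply-accumulate (C += A_tile × B_tile), which is broadly how tensor cores and similar engines are driven. The 4×4 tile size and the helper names are hypothetical choices for illustration. Real matrix units work on their own tile shapes and low-precision number formats, and do the work in hardware rather than in loops.

```python
# Tiled matrix multiply built from a tile-level multiply-accumulate (MMA).
# Assumption: TILE = 4 and the helper names are hypothetical; real matrix
# engines define their own tile shapes and data types.

TILE = 4
Matrix = list[list[float]]


def mma_tile(a: Matrix, b: Matrix, c: Matrix, i0: int, j0: int, k0: int) -> None:
    """One 'instruction': accumulate a TILE x TILE block product into c."""
    for i in range(i0, i0 + TILE):
        for j in range(j0, j0 + TILE):
            acc = c[i][j]
            for k in range(k0, k0 + TILE):
                acc += a[i][k] * b[k][j]   # a single multiply-accumulate (MAC)
            c[i][j] = acc


def tiled_matmul(a: Matrix, b: Matrix) -> Matrix:
    """C = A @ B for square matrices whose size is a multiple of TILE."""
    n = len(a)
    if n % TILE:
        raise ValueError("matrix size must be a multiple of TILE")
    c = [[0.0] * n for _ in range(n)]
    for i0 in range(0, n, TILE):
        for j0 in range(0, n, TILE):
            # Keep one output tile resident and stream K-tiles through it.
            for k0 in range(0, n, TILE):
                mma_tile(a, b, c, i0, j0, k0)
    return c
```

An n × n multiply performs n³ MACs regardless of tiling; the tiling determines how much data must be fetched for each output tile, which is where memory bandwidth comes in.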

The compute die will also need some control logic and, ideally, some vector units, but for AI the most important thing is a sufficiently powerful matrix unit with access to enough high-bandwidth memory to keep it fed.
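The point about memory can be quantified with the roofline model: attainable throughput is the lesser of peak compute and memory bandwidth multiplied by arithmetic intensity (FLOPs per byte moved). The sketch below uses hypothetical peak figures rather than specifications for any OpenAI or Broadcom part, and assumes each operand crosses the memory bus exactly once.

```python
# Roofline estimate: is a matrix multiply compute-bound or memory-bound?
# Assumptions: PEAK_TFLOPS and HBM_TBPS are hypothetical placeholders, and
# each operand is read or written exactly once (ideal on-chip reuse).

PEAK_TFLOPS = 1000.0   # hypothetical dense matrix throughput (TFLOP/s)
HBM_TBPS = 8.0         # hypothetical memory bandwidth (TB/s)


def gemm_intensity(m: int, n: int, k: int, bytes_per_elem: int = 2) -> float:
    """FLOPs per byte for C[m,n] = A[m,k] @ B[k,n]."""
    flops = 2 * m * n * k                          # one MAC = 2 FLOPs
    traffic = bytes_per_elem * (m * k + k * n + m * n)
    return flops / traffic


def attainable_tflops(intensity: float) -> float:
    """Roofline: capped by compute, or by bandwidth x intensity."""
    return min(PEAK_TFLOPS, HBM_TBPS * intensity)


big = attainable_tflops(gemm_intensity(8192, 8192, 8192))
skinny = attainable_tflops(gemm_intensity(1, 8192, 8192))
print(f"large GEMM: {big:.0f} TFLOP/s, matrix-vector: {skinny:.1f} TFLOP/s")
```

A large square multiply saturates the matrix units, while a single-row, matrix-vector-shaped product (typical of token-by-token inference) is limited to roughly 8 TFLOP/s by memory bandwidth in this example. This is why pairing the matrix engine with enough HBM matters as much as its peak FLOPs.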

With Broadcom supplying nearly everything else, OpenAI's chip team can concentrate on tuning the compute architecture for its in-house workloads, which makes the whole process far less arduous.

That's why cloud providers and hyperscalers tend to license so much of their accelerator designs from merchant chip vendors. There is no sense wasting resources reinventing the wheel when you can reinvest them in your core competencies.

With Altman planning to pour hundreds of billions of dollars (mostly other people's money) into AI infrastructure under his Stargate project, it is hardly surprising that Broadcom's new $10 billion customer would turn out to be OpenAI.

However, the startup isn't the only company rumored to be working with Broadcom on custom AI accelerators. You may recall that late last year, The Information reported that Apple would become Broadcom's next major XPU customer, with a chip codenamed "Baltra" due in 2026.

Since then, Apple has committed to investing $500 billion and hiring 20,000 people to bolster its US manufacturing capabilities. The investment includes a manufacturing plant in Texas that will produce AI servers built on Apple's in-house chips.
