AI chip companies now number more than 100

New research shows that the number of companies developing artificial intelligence processor chips has now exceeded one hundred.

Since OpenAI released ChatGPT in late 2022, interest in training and deploying artificial intelligence models has surged, and graphics processing units (GPUs), especially those made by US chip company Nvidia, have become the accelerator of choice for these efforts.

However, GPUs are not the only option for AI training, and especially not for inference (the actual use of trained models). Alternatives include the neural processing units (NPUs) embedded in certain desktop and laptop CPUs.

Other manufacturers also want to join these ranks, perhaps spurred by the fact that Nvidia became the first publicly traded company with a market value exceeding $4 trillion last month.

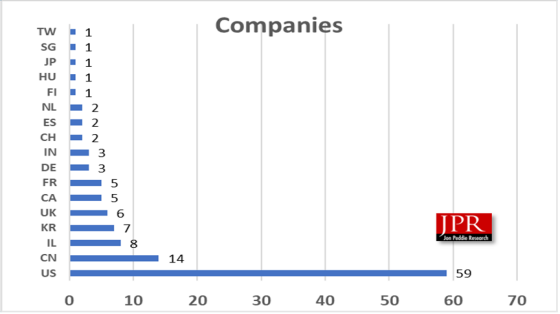

According to Jon Peddie Research (JPR), no fewer than 121 companies are now developing or planning to develop AI processors, ranging from tiny chips for embedded applications and IoT-class devices to hyperscale data center products.

JPR's Q3 2025 Artificial Intelligence Processor Market Development Report found that, as expected, the United States is currently in the lead, home to at least 59 of the companies. China trails with only 14, while most other countries have a handful at most.

JPR noted that although the United States still leads, China's DeepSeek and Huawei continue to push ahead with advanced chips, and India has announced a domestic GPU program that aims to reach production by 2029.

Within the United States, California and Texas are hotbeds of development activity, with California alone home to no fewer than 42 of the country's AI chip companies.

The research firm said that, together, these companies have attracted more than $13.5 billion in startup funding, with many of them raising $100 million or more in the past year alone.

But Dr. Jon Peddie, President of JPR, warned that a shakeout in the coming years could eliminate many of the emerging AI silicon companies.

This shakeout may come soon. As The Register recently reported, many customers are starting to find that the billions of dollars they have invested in AI development, in the belief (or perhaps hope) that AI would bring a leap in productivity, have yielded almost no return.

JPR's "AI Processor" report confirms that 121 companies are currently developing or planning to develop AI processors, covering a range of products from tiny IoT devices to hyperscale data center accelerators. Since 2006, these companies have attracted more than $13.5 billion in startup funding, with dozens of them raising $100 million or more in the past year alone.

Dr. Jon Peddie, President of JPR, said: "AI processors are experiencing a Cambrian explosion, reminiscent of the 3D graphics craze of the late 1990s and the XR wave of the 2010s. We expect rapid consolidation in the coming years, and the 121 companies we currently track will shrink to approximately 25 by the end of the 2020s."

The United States currently leads in AI hardware and software, but that lead is under threat. China's DeepSeek and Huawei continue to push ahead with advanced chip development, India has announced a domestic GPU project that aims to reach mass production by 2029, and policy shifts in Washington are reshaping the competitive landscape. In the second quarter, the lifting of export restrictions allowed American companies such as Nvidia and AMD to strike deals worth billions of dollars in Saudi Arabia.

JPR's database tracks 121 companies located in major technology centers. It spans the entire market and groups suppliers into five categories:

1. AI IoT - Ultra-low-power inference in microcontrollers or small SoCs (TinyML class). High unit volumes (hundreds of millions), but low average selling prices.

2. AI Edge - Inference on or near devices in the 1-100 W range, outside the data center. Includes robots, smart cameras, and industrial AI gateways.

3. AI Automotive - Tracked separately from AI Edge because of its different economics and design cycles (ADAS and autonomous driving compute).

4. AI Data Center Training - High-end accelerators for LLM and other model training. Low unit volumes, but extremely high average selling prices.

5. AI Data Center Inference - Hyperscale serving of large AI models. A mix of GPUs, NPUs, and custom ASICs.

The companies are spread around the world.
